Deployment of OpenStack K8S with operators - proof of concept environment¶

Introduction¶

This document describes the process to deploy a Proof of Concept (PoC) OpenStack environment using the OpenStack Kubernetes Operators project as the deployment tool. This process is not intended for production use, and some of the components and tools used are in an early development phase and subject to change.

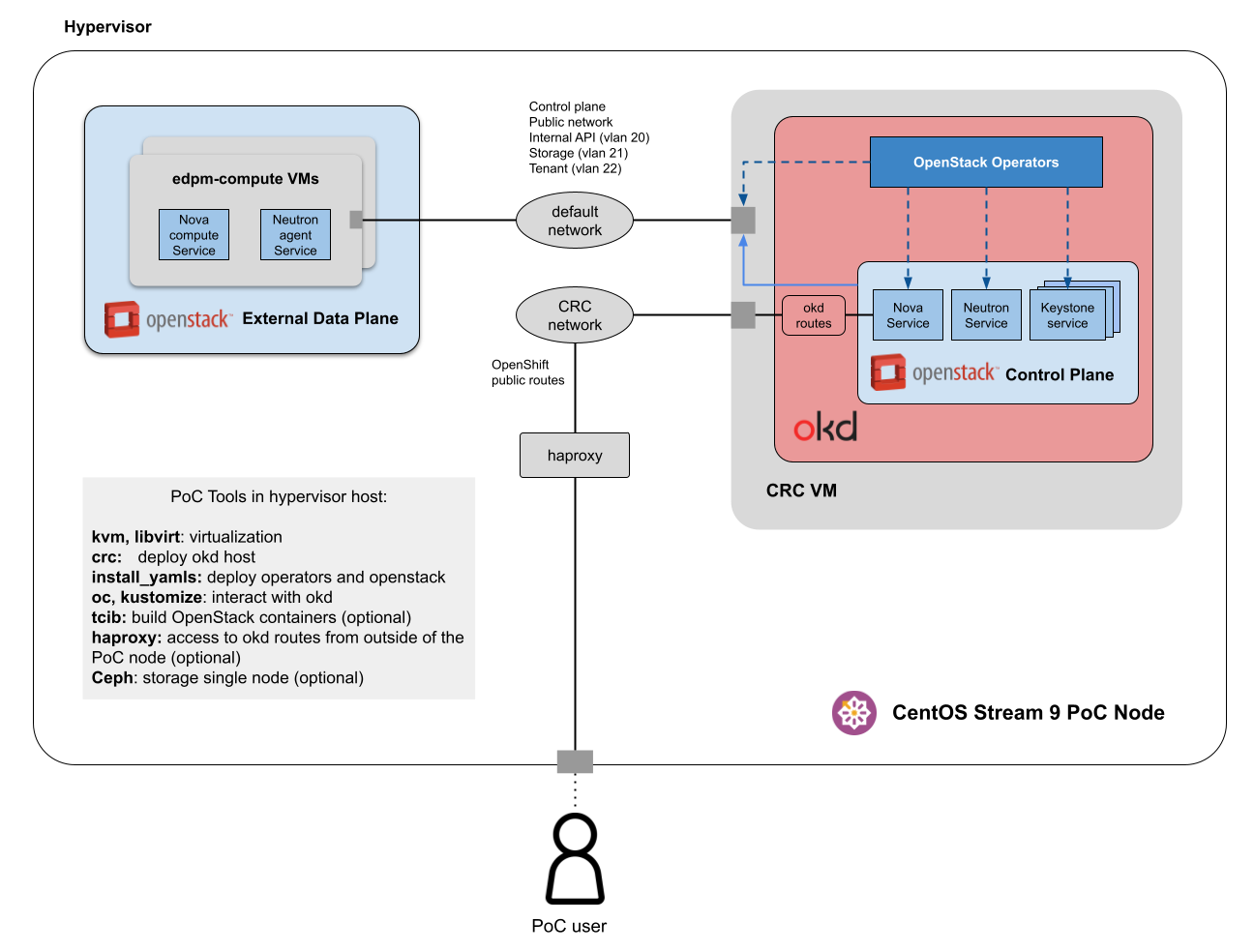

The idea behind this PoC is to deploy an entire OpenStack installation in a single CentOS Stream 9 server. The architecture is shown in folowing diagram:

In the following sections, you will find instructions to deploy the OpenStack Antelope and Bobcat releases. Note that Antelope is the default release in the upstream OpenStack Kubernetes Operators project, while Bobcat is not tested upstream at this point.

The main components are:

- The CentOS Stream 9 PoC server hosts two KVM virtual machines: the crc VM and an edpm-compute node. Additionally, some tools used to install the PoC are installed locally on the PoC host.

- The OKD cluster is deployed using the CRC (CodeReady Containers) tool, which creates a VM to run a single-node cluster.

- The OpenStack operators are installed in the OKD instance.

- The edpm-compute VM is created from the official CentOS Stream 9 cloud image and acts as a Nova compute node for the External Data Plane.

- There are two virtual networks on the PoC server:

  - crc network: only the crc VM is attached. The applications exposed by the OKD cluster are reachable through this interface.

  - default network: both the crc and the edpm-compute VMs are attached. All the OpenStack traffic goes through this interface. In the default configuration, the internal API, tenant network and storage traffic is isolated using VLAN segmentation.

- tcib is installed on the PoC server if the containers need to be built locally.

- An HAProxy service can be installed and configured on the PoC server to provide external access to the OKD console and to the OpenStack dashboard and APIs.

- Optionally, a single-node Ceph cluster can be installed on the PoC server to be used as a Cinder or Glance backend.

Requirements for the PoC server¶

Although the procedure described in this guide has been tested in a virtual machine, a bare-metal server is recommended. The minimum resource requirements are:

- 32 GB RAM

- 16 vCPUs

- 100 GB disk space

- nested virtualization enabled

The deployment must be performed on CentOS Stream 9.
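The requirements above can be checked with a small preflight script before starting. This is a minimal sketch, not part of install_yamls: check_min, nested_enabled and preflight are hypothetical helpers, the RAM threshold is lowered to 30 GiB on the assumption that MemTotal on a 32 GB host is reported slightly below 32 GiB, and the disk check assumes the space must be free on the filesystem holding $HOME, where CRC keeps its VM under ~/.crc.

```shell
#!/usr/bin/env bash
# Preflight sketch for the PoC host (hypothetical helpers, not part of install_yamls).

# check_min LABEL ACTUAL MINIMUM: print OK/FAIL and return 1 when ACTUAL < MINIMUM.
check_min() {
  local label=$1 actual=$2 minimum=$3
  if (( actual >= minimum )); then
    echo "OK   ${label}: ${actual} (min ${minimum})"
  else
    echo "FAIL ${label}: ${actual} (min ${minimum})"
    return 1
  fi
}

# nested_enabled: succeed if kvm_intel or kvm_amd reports nested virtualization (Y or 1).
nested_enabled() {
  local f
  for f in /sys/module/kvm_intel/parameters/nested /sys/module/kvm_amd/parameters/nested; do
    if [[ -r $f ]] && grep -qE '^(Y|1)$' "$f"; then
      return 0
    fi
  done
  return 1
}

preflight() {
  local rc=0 mem_kib mem_gib cpus disk_gib
  mem_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo 2>/dev/null)
  mem_gib=$(( ${mem_kib:-0} / 1024 / 1024 ))
  cpus=$(nproc)
  # Free space in GiB on the filesystem holding $HOME (CRC stores its VM in ~/.crc).
  disk_gib=$(df --output=avail -BG "$HOME" | tail -n 1 | tr -dc '0-9')
  check_min "RAM (GiB)" "$mem_gib" 30 || rc=1
  check_min "vCPUs" "$cpus" 16 || rc=1
  check_min "free disk (GiB)" "${disk_gib:-0}" 100 || rc=1
  if nested_enabled; then
    echo "OK   nested virtualization"
  else
    echo "FAIL nested virtualization"
    rc=1
  fi
  return "$rc"
}

preflight || echo "This host does not meet the minimum PoC requirements."
```

Each failed check prints a FAIL line, so the output doubles as a short report of what to fix on the host.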

Install install_yamls and other PoC requirements¶

$ sudo dnf install -y git wget jq make tmux golang

$ mkdir -p ~/.local/bin

$ curl -L https://github.com/okd-project/okd/releases/download/<version>/openshift-client-linux-<version>.tar.gz | tar -zx -C ~/.local/bin/

Install kustomize (required by install_yamls) by downloading the tarball for the desired version from https://github.com/kubernetes-sigs/kustomize/releases/ and extracting it into the bin directory:

$ tar xzvf kustomize_<version>_linux_amd64.tar.gz -C ~/.local/bin/

Install yq (CLI yaml, json and xml processor):

$ wget https://github.com/mikefarah/yq/releases/latest/download/yq_linux_amd64.tar.gz

$ mkdir yq

$ tar xzvf yq_linux_amd64.tar.gz -C yq

$ cp yq/yq_linux_amd64 ~/.local/bin/yq

Clone install_yamls:

$ git clone https://github.com/openstack-k8s-operators/install_yamls.git
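Since the binaries above are extracted into ~/.local/bin, that directory has to be on PATH. A quick sketch to confirm every tool is reachable; missing_tools is a hypothetical helper, and the tool list assumes the golang package provides go and the OKD client tarball provides oc.

```shell
#!/usr/bin/env bash
# Sketch: verify the PoC tools are reachable on PATH (hypothetical helper).

# missing_tools TOOL...: print every TOOL that cannot be found on PATH, one per line.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Prepend ~/.local/bin for this shell if it is not already on PATH.
case ":$PATH:" in
  *":$HOME/.local/bin:"*) ;;
  *) export PATH="$HOME/.local/bin:$PATH" ;;
esac

missing=$(missing_tools git wget jq make tmux go oc kustomize yq)
if [[ -n $missing ]]; then
  echo "Missing tools: ${missing//$'\n'/ }"
else
  echo "All PoC tools found on PATH."
fi
```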

Getting started with the CRC¶

All installation details can be found on the CRC web page.

- Download the latest CRC tarball.

- Unpack the crc-linux-amd64.tar.xz tarball and copy the crc binary to the bin directory created earlier:

$ tar xvf crc-linux-amd64.tar.xz

$ cp crc-linux-*-amd64/crc ~/.local/bin

$ crc config set preset okd

$ crc config set consent-telemetry no

$ crc config view

$ crc setup

$ crc start -m 23000 -c 8 -d 50

You can review the current configuration with crc config view.

crc start may take some time to complete. Once the cluster is up, test the connection with the following commands:

$ eval $(crc oc-env)

$ oc login -u kubeadmin -p <password> https://api.crc.testing:6443

$ oc get pods -A

The kubeadmin password is printed on stdout when the cluster setup completes. It can also be retrieved by running crc console --credentials.

At this point CRC is up and running.

Add the required network interface to the crc VM, then create the persistent volumes used by the OpenStack services:

$ cd ~/install_yamls/devsetup

$ make crc_attach_default_interface

$ cd ..

$ make crc_storage

Once it completes, twelve persistent volumes in the Available state should be listed by:

$ oc get pv
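The check can be scripted by counting the persistent volumes whose phase is Available. A minimal sketch; count_available_pvs is a hypothetical helper, and it assumes an oc session already logged in as kubeadmin.

```shell
#!/usr/bin/env bash
# count_available_pvs: read "NAME PHASE" lines on stdin and print how many are Available.
count_available_pvs() {
  awk '$2 == "Available" { n++ } END { print n + 0 }'
}

if command -v oc >/dev/null 2>&1; then
  available=$(oc get pv \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.phase}{"\n"}{end}' \
    | count_available_pvs)
  echo "Available persistent volumes: ${available} (twelve expected)"
fi
```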

Note: you can access the crc VM over SSH with the following command:

$ ssh -i ~/.crc/machines/crc/id_ecdsa -o StrictHostKeyChecking=no core@`crc ip`

Deploy the OpenStack Kubernetes operators¶

Create the required Secret and ConfigMap used by the services as input, then install the operators:

$ cd ~/install_yamls

$ export GOPROXY="https://proxy.golang.org,direct"

$ make input

$ make openstack OKD=true


All OpenStack operators should eventually reach the Succeeded phase, for example:

$ oc get csv -n openstack-operators

NAME                                  DISPLAY                        VERSION   REPLACES                   PHASE
barbican-operator.v0.0.1              Barbican Operator              0.0.1                                Succeeded
cert-manager.v1.13.3                  cert-manager                   1.13.3    cert-manager.v1.13.1       Succeeded
cinder-operator.v0.0.1                Cinder Operator                0.0.1                                Succeeded
designate-operator.v0.0.1             Designate Operator             0.0.1                                Succeeded
glance-operator.v0.0.1                Glance Operator                0.0.1                                Succeeded
heat-operator.v0.0.1                  Heat Operator                  0.0.1                                Succeeded
horizon-operator.v0.0.1               Horizon Operator               0.0.1                                Succeeded
infra-operator.v0.0.1                 OpenStack Infra                0.0.1                                Succeeded
ironic-operator.v0.0.1                Ironic Operator                0.0.1                                Succeeded
keystone-operator.v0.0.1              Keystone Operator              0.0.1                                Succeeded
manila-operator.v0.0.1                Manila Operator                0.0.1                                Succeeded
mariadb-operator.v0.0.1               MariaDB Operator               0.0.1                                Succeeded
metallb-operator.v0.13.11             MetalLB Operator               0.13.11   metallb-operator.v0.13.3   Succeeded
neutron-operator.v0.0.1               Neutron Operator               0.0.1                                Succeeded
nova-operator.v0.0.1                  Nova Operator                  0.0.1                                Succeeded
octavia-operator.v0.0.1               Octavia Operator               0.0.1                                Succeeded
openstack-ansibleee-operator.v0.0.1   OpenStackAnsibleEE             0.0.1                                Succeeded
openstack-baremetal-operator.v0.0.1   OpenStack Baremetal Operator   0.0.1                                Succeeded
openstack-operator.v0.0.1             OpenStack                      0.0.1                                Succeeded
ovn-operator.v0.0.1                   OVN Operator                   0.0.1                                Succeeded
placement-operator.v0.0.1             Placement Operator             0.0.1                                Succeeded
rabbitmq-cluster-operator.v0.0.1      RabbitMQ Cluster Operator      0.0.1                                Succeeded
swift-operator.v0.0.1                 Swift operator                 0.0.1                                Succeeded
telemetry-operator.v0.0.1             Telemetry Operator             0.0.1                                Succeeded
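Rather than re-running oc get csv by hand, the wait can be scripted. A sketch under stated assumptions: wait_for_operators and not_succeeded are hypothetical helpers, the 900-second timeout is an arbitrary choice, and an empty CSV list is treated as "not ready yet" because OLM may not have created the CSVs when polling starts.

```shell
#!/usr/bin/env bash
# not_succeeded: read "NAME PHASE" lines on stdin, print those whose phase is not Succeeded.
not_succeeded() {
  awk 'NF && $2 != "Succeeded" { print $1 " " ($2 == "" ? "<no phase>" : $2) }'
}

# wait_for_operators [TIMEOUT_SECONDS]: poll openstack-operators until every CSV succeeded.
wait_for_operators() {
  local timeout=${1:-900} waited=0 csvs pending
  while (( waited < timeout )); do
    csvs=$(oc get csv -n openstack-operators \
      -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.phase}{"\n"}{end}' 2>/dev/null)
    pending=$(printf '%s\n' "$csvs" | not_succeeded)
    # An empty list means the CSVs do not exist yet, so keep waiting.
    if [[ -n $csvs && -z $pending ]]; then
      echo "All operators are in phase Succeeded."
      return 0
    fi
    sleep 10
    (( waited += 10 ))
  done
  echo "Timed out after ${timeout}s; not yet Succeeded:"
  printf '%s\n' "${pending:-<no CSVs found>}"
  return 1
}

if command -v oc >/dev/null 2>&1; then
  wait_for_operators 900
fi
```

On the PoC host the final if block starts the wait immediately; remove it if you only want to load the functions into your shell.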

Known issue: in some cases, the operator installation has been observed to hang at the following command:

oc wait pod -n metallb-system --for condition=Ready -l control-plane=controller-manager --timeout=300s

Re-running make openstack OKD=true has fixed the installation in those cases.
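If the installation stalls this way, the re-run can be automated with a small retry wrapper. A sketch only; retry is a hypothetical helper, and it assumes the stuck oc wait eventually hits its 300 s timeout so that make exits non-zero and the wrapper can start the next attempt.

```shell
#!/usr/bin/env bash
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND...: run COMMAND until it succeeds or
# MAX_ATTEMPTS is reached (hypothetical helper, not part of install_yamls).
retry() {
  local max=$1 delay=$2 attempt=1
  shift 2
  until "$@"; do
    if (( attempt >= max )); then
      echo "Giving up after ${attempt} attempts: $*" >&2
      return 1
    fi
    echo "Attempt ${attempt} failed, retrying in ${delay}s: $*" >&2
    sleep "$delay"
    (( attempt++ ))
  done
}
```

For example, from ~/install_yamls: retry 3 30 make openstack OKD=true. The attempt count and delay are arbitrary choices.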

Create the EDPM compute node virtual machine¶

Use the install_yamls scripts to create and configure the VM:

$ cd ~/install_yamls/devsetup

$ EDPM_TOTAL_NODES=1 make edpm_compute

Once it finishes, an edpm-compute-0 VM should be listed as running by:

$ sudo virsh list

Note: you can access the compute node VM with:

$ ssh 192.168.122.100 -i ~/install_yamls/out/edpm/ansibleee-ssh-key-id_rsa -l cloud-admin
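Right after make edpm_compute returns, the VM may still be booting. A sketch that waits until SSH answers, assuming the address, key and user shown above; wait_for_ssh is a hypothetical helper and the 300-second default timeout is arbitrary.

```shell
#!/usr/bin/env bash
# wait_for_ssh HOST KEY USER [TIMEOUT_SECONDS]: retry a no-op SSH command every 5 seconds.
wait_for_ssh() {
  local host=$1 key=$2 user=$3 timeout=${4:-300} waited=0
  until ssh -i "$key" -l "$user" -o BatchMode=yes -o ConnectTimeout=5 \
        -o StrictHostKeyChecking=no "$host" true 2>/dev/null; do
    if (( waited >= timeout )); then
      echo "No SSH access to ${user}@${host} after ${timeout}s" >&2
      return 1
    fi
    sleep 5
    (( waited += 5 ))
  done
  echo "SSH to ${user}@${host} is available."
}
```

For example: wait_for_ssh 192.168.122.100 ~/install_yamls/out/edpm/ansibleee-ssh-key-id_rsa cloud-admin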

At this point, you are ready to deploy OpenStack using the operators. The following sections describe how to deploy the Antelope and Bobcat releases. Note that Antelope is the default OpenStack release in the OpenStack Kubernetes Operators project, while Bobcat is not tested or supported at this point, so its procedure requires building custom containers from RDO Bobcat packages.

Deploy OpenStack Antelope release¶

Deploy the OpenStack control plane¶

Once the operators are installed, the next step is to deploy the control plane from the install_yamls directory:

$ cd ~/install_yamls

$ make openstack_deploy OKD=true

$ oc get openstackcontrolplane

Its status shows Setup complete once all the services are running:

$ oc get openstackcontrolplane -n openstack

NAME                                 STATUS   MESSAGE
openstack-galera-network-isolation   True     Setup complete
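Instead of polling by hand, oc wait can block until the control plane is ready. This sketch assumes the STATUS column reflects a condition of type Ready on the OpenStackControlPlane resource; wait_ready is a hypothetical helper and the 30-minute timeout in the usage line is an arbitrary choice.

```shell
#!/usr/bin/env bash
# wait_ready KIND NAMESPACE TIMEOUT: wait until every object of KIND reports the
# (assumed) Ready condition, then print the objects.
wait_ready() {
  local kind=$1 namespace=$2 timeout=$3
  if oc wait "$kind" --all -n "$namespace" --for condition=Ready --timeout="$timeout"; then
    oc get "$kind" -n "$namespace"
  else
    echo "Not Ready yet; inspect the conditions with: oc describe $kind -n $namespace" >&2
    return 1
  fi
}
```

For example: wait_ready openstackcontrolplane openstack 30m. The same helper can be pointed at openstackdataplanenodeset or openstackdataplanedeployment, assuming those kinds expose a Ready condition as well.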

You can also inspect the created resources with:

$ oc get deployments -n openstack

$ oc get statefulset -n openstack

$ oc get csv -n openstack

$ oc get pods -n openstack -w

Once the process finishes the OpenStack APIs and services are available. However, there are no compute nodes able to run instances yet.

Deploy the OpenStack external data plane¶

Deploy the data plane using the dataplane operator:

$ cd ~/install_yamls/

$ DATAPLANE_TOTAL_NODES=1 make edpm_wait_deploy

$ oc get openstackdataplanedeployment

$ oc get openstackdataplanenodeset

You can also follow the progress by checking the pods with edpm in their names:

$ oc get pods -n openstack | grep edpm

Deploy OpenStack Bobcat release¶

Build OpenStack containers from RDO Bobcat repository¶

On the PoC server, follow the instructions in the Build OpenStack containers with TCIB section.

Make sure you pass the options required to push the images to the registry running in the crc OKD instance: --push --registry default-route-openshift-image-registry.apps-crc.testing.
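Pushing to that route requires an authenticated session against the OKD internal registry. If the TCIB section does not already cover it, the following is a sketch of the login, assuming the kubeadmin session token from oc whoami -t is accepted as the password and that the route uses the self-signed CRC certificate (hence --tls-verify=false). registry_login is a hypothetical helper; run it as the same user that runs the build so the credentials land in that user's containers auth file.

```shell
#!/usr/bin/env bash
REGISTRY=default-route-openshift-image-registry.apps-crc.testing

# registry_login [REGISTRY]: log podman into the OKD registry with the current oc token,
# passing the token on stdin so it does not appear in the process list.
registry_login() {
  local registry=${1:-$REGISTRY}
  oc whoami -t | podman login --tls-verify=false -u kubeadmin --password-stdin "$registry"
}
```

Call registry_login once before starting the build, and again if your oc login session is renewed, since the token belongs to that session.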

When the command finishes, all the images should appear as ImageStreams in the openstack project:

$ oc get imagestreams --namespace openstack

Deploy the OpenStack control plane¶

Clone the repository containing the Custom Resources for a Bobcat installation that matches the default install_yamls configuration:

$ cd ~

$ git clone https://pagure.io/centos-sig-cloud/rdo_artifacts.git

Deploy the control plane using the Custom Resources included in the repo.

$ cd ~/rdo_artifacts/bobcat-cloudsig-cr/controlplane

$ oc kustomize . | oc create -f -

$ oc get openstackcontrolplane -n openstack

$ oc get deployments -n openstack

$ oc get statefulset -n openstack

$ oc get pods -n openstack

You can monitor the progress by checking the Messages in the openstackcontrolplane object.

$ oc get openstackcontrolplane

Its status shows Setup complete once all the services defined in the Custom Resource are running:

$ oc get openstackcontrolplane -n openstack

NAME                                 STATUS   MESSAGE
openstack-galera-network-isolation   True     Setup complete

Deploy the OpenStack external data plane¶

In order to deploy the data plane compute nodes with container images from the registry in the OKD cluster, the following iptables rules are required to allow traffic between the libvirt default network bridge (virbr0) and the crc network:

$ sudo iptables -I LIBVIRT_FWO -i virbr0 -o crc -j ACCEPT

$ sudo iptables -I LIBVIRT_FWI -i virbr0 -o crc -j ACCEPT

$ sudo iptables -I LIBVIRT_FWI -i crc -o virbr0 -j ACCEPT

$ sudo iptables -I LIBVIRT_FWO -i crc -o virbr0 -j ACCEPT

$ sudo iptables -I LIBVIRT_FWI -d 192.168.130.11 -o crc -j ACCEPT

$ sudo iptables-save
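Each iptables -I adds a new copy of a rule, so re-running the commands above piles up duplicates, and the rules are not persisted across reboots (iptables-save only prints the current ruleset). A sketch of an idempotent variant that checks each rule with iptables -C before inserting it; ensure_rule, iptables_cmd and apply_crc_forwarding_rules are hypothetical names, and the default 192.168.130.11 is taken from the commands above as the crc VM address (compare it with the output of crc ip).

```shell
#!/usr/bin/env bash
# iptables_cmd: thin wrapper so every call goes through sudo in one place.
iptables_cmd() { sudo iptables "$@"; }

# ensure_rule CHAIN RULE...: insert RULE at the top of CHAIN only if an identical rule
# is not already present (iptables -C exits non-zero when the rule does not exist).
ensure_rule() {
  local chain=$1
  shift
  if ! iptables_cmd -C "$chain" "$@" 2>/dev/null; then
    iptables_cmd -I "$chain" "$@"
  fi
}

# apply_crc_forwarding_rules [CRC_IP]: same rules as above, in the same order.
apply_crc_forwarding_rules() {
  local crc_ip=${1:-192.168.130.11}
  ensure_rule LIBVIRT_FWO -i virbr0 -o crc -j ACCEPT
  ensure_rule LIBVIRT_FWI -i virbr0 -o crc -j ACCEPT
  ensure_rule LIBVIRT_FWI -i crc -o virbr0 -j ACCEPT
  ensure_rule LIBVIRT_FWO -i crc -o virbr0 -j ACCEPT
  ensure_rule LIBVIRT_FWI -d "$crc_ip" -o crc -j ACCEPT
}
```

For example: apply_crc_forwarding_rules "$(crc ip)"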

Then create the NetConfig custom resource from the infra-operator sample:

$ wget https://raw.githubusercontent.com/openstack-k8s-operators/infra-operator/main/config/samples/network_v1beta1_netconfig.yaml

$ oc create -f network_v1beta1_netconfig.yaml

Create the required secrets for the dataplane.

$ cd ~/install_yamls

$ make edpm_deploy_generate_keys

Set the kubeadmin token required to pull images from the crc registry. Get the token with:

$ oc whoami -t

In ~/rdo_artifacts/bobcat-cloudsig-cr/dataplane/kustomization.yaml, replace KUBEADMIN_TOKEN with that value:

- op: replace
  path: /spec/nodeTemplate/ansible/ansibleVars/edpm_container_registry_logins
  value: { default-route-openshift-image-registry.apps-crc.testing: { kubeadmin: '<KUBEADMIN_TOKEN>' }}
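The substitution can be done with sed instead of editing the file by hand. A sketch; set_registry_token is a hypothetical helper, it replaces the placeholder with or without the angle brackets shown above, and it assumes the token contains no | or & characters (OKD tokens of the form sha256~... only use letters, digits, - and _ after the prefix).

```shell
#!/usr/bin/env bash
# set_registry_token FILE TOKEN: replace the KUBEADMIN_TOKEN placeholder in FILE in place.
set_registry_token() {
  local file=$1 token=$2
  if ! grep -q 'KUBEADMIN_TOKEN' "$file"; then
    echo "No KUBEADMIN_TOKEN placeholder in $file" >&2
    return 1
  fi
  # <? and >? make the surrounding angle brackets optional.
  sed -i -E "s|<?KUBEADMIN_TOKEN>?|${token}|g" "$file"
}
```

For example: set_registry_token ~/rdo_artifacts/bobcat-cloudsig-cr/dataplane/kustomization.yaml "$(oc whoami -t)"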

Then create the OpenStack data plane objects from those Custom Resources:

$ cd ~/rdo_artifacts/bobcat-cloudsig-cr/dataplane

$ oc kustomize . | oc create -f -

In this case, monitor the status of the openstackdataplanedeployment and openstackdataplanenodeset objects:

$ oc get openstackdataplanedeployment

$ oc get openstackdataplanenodeset

You can also follow the progress by checking the pods with edpm in their names:

$ oc get pods -n openstack | grep edpm

Once the data plane has been deployed, add the compute node to the Nova cell:

$ cd ~/install_yamls

$ make edpm_nova_discover_hosts

A message similar to the following should appear:

Found 1 unmapped computes in cell: < ID of the compute node>

Test the OpenStack deployment¶

At this point, the cloud should be ready to use. Access it with:

$ oc rsh openstackclient

The openstackclient pod has the openstack client installed and configured, so you can run any openstack command, for example:

$ openstack endpoint list
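Commands can also be run from the PoC host without opening an interactive shell, by executing them in the openstackclient pod. A sketch; osc is a hypothetical shell function, and the openstack namespace is the one used throughout this guide.

```shell
#!/usr/bin/env bash
# osc ARGS...: run "openstack ARGS..." inside the openstackclient pod.
osc() {
  oc exec -n openstack openstackclient -- openstack "$@"
}
```

For example, osc endpoint list, or osc compute service list to confirm that the EDPM compute node is registered after make edpm_nova_discover_hosts.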

For testing purposes, a new instance can be spawned using the edpm_deploy_instance make target:

$ cd ~/install_yamls/devsetup

$ make edpm_deploy_instance

Known issue: when this PoC is deployed in virtual machines, the validation has been observed to time out while trying to ping the newly created instance. The root cause is that the compute node is a nested VM, so it uses QEMU emulation to run OpenStack instances, which then take longer to boot than the timeout used in the edpm_deploy_instance make target.
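In that case the instance usually just needs more time. A sketch that polls its status with a longer timeout before checking connectivity by hand; wait_server_active is a hypothetical helper, the server name is a placeholder to take from openstack server list, and the 15-minute default is an arbitrary choice. Note that ACTIVE only means the guest has been started; under QEMU emulation the guest OS may need several more minutes before it answers ping.

```shell
#!/usr/bin/env bash
# wait_server_active NAME [TIMEOUT_SECONDS]: poll the server status through the
# openstackclient pod until it is ACTIVE, goes to ERROR, or the timeout expires.
wait_server_active() {
  local name=$1 timeout=${2:-900} waited=0 status
  while (( waited <= timeout )); do
    status=$(oc exec -n openstack openstackclient -- \
      openstack server show "$name" -f value -c status 2>/dev/null)
    case $status in
      ACTIVE) echo "${name} is ACTIVE"; return 0 ;;
      ERROR) echo "${name} is in ERROR" >&2; return 1 ;;
    esac
    sleep 15
    (( waited += 15 ))
  done
  echo "${name} not ACTIVE after ${timeout}s (last status: ${status:-unknown})" >&2
  return 1
}
```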

Access OpenStack and OKD externally¶

The OKD and OpenStack services deployed following these instructions are only accessible from systems running on the PoC host. If you want to access the APIs or dashboards from outside, you can install and configure HAProxy to forward traffic from the PoC host IP address to the OKD instance, where all the APIs and web interfaces are published.

Install haproxy:

$ sudo dnf -y install haproxy

Run the following command so that SELinux allows HAProxy to bind to the OKD API port 6443:

$ sudo semanage port -a -t http_port_t -p tcp 6443

Replace all the frontend and backend sections declared in /etc/haproxy/haproxy.cfg with the following content:

frontend apps
    bind POC_SERVER_IP:80
    bind POC_SERVER_IP:443
    option tcplog
    mode tcp
    default_backend apps

backend apps
    mode tcp
    balance roundrobin
    option ssl-hello-chk
    server okd_routes CRC_IP check port 443

frontend okd_api
    bind POC_SERVER_IP:6443
    option tcplog
    mode tcp
    default_backend okd_api

backend okd_api
    mode tcp
    balance roundrobin
    option ssl-hello-chk
    server okd_api CRC_IP:6443 check

Replace POC_SERVER_IP with the IP address of the PoC host and CRC_IP with the address returned by the crc ip command.
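The placeholders can be filled in by a small generator instead of by hand. A sketch; render_haproxy is a hypothetical helper that prints the same sections as above, and 203.0.113.10 in the usage line is a documentation placeholder for the PoC host IP.

```shell
#!/usr/bin/env bash
# render_haproxy POC_SERVER_IP CRC_IP: print the frontend/backend sections with the
# placeholders substituted.
render_haproxy() {
  local poc_ip=$1 crc_ip=$2
  cat <<EOF
frontend apps
    bind ${poc_ip}:80
    bind ${poc_ip}:443
    option tcplog
    mode tcp
    default_backend apps

backend apps
    mode tcp
    balance roundrobin
    option ssl-hello-chk
    server okd_routes ${crc_ip} check port 443

frontend okd_api
    bind ${poc_ip}:6443
    option tcplog
    mode tcp
    default_backend okd_api

backend okd_api
    mode tcp
    balance roundrobin
    option ssl-hello-chk
    server okd_api ${crc_ip}:6443 check
EOF
}
```

For example, after deleting the existing frontend and backend sections, run render_haproxy 203.0.113.10 "$(crc ip)" | sudo tee -a /etc/haproxy/haproxy.cfg, then validate the file with sudo haproxy -c -f /etc/haproxy/haproxy.cfg before starting the service.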

Start and enable haproxy server:

$ sudo systemctl enable haproxy

$ sudo systemctl start haproxy

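On each system that needs external access, add the following entry to the local /etc/hosts file, replacing <POC HOST IP> with the IP address of the PoC host: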

<POC HOST IP> horizon-openstack.apps-crc.testing barbican-public-openstack.apps-crc.testing cinder-public-openstack.apps-crc.testing glance-default-public-openstack.apps-crc.testing keystone-public-openstack.apps-crc.testing neutron-public-openstack.apps-crc.testing nova-novncproxy-cell1-public-openstack.apps-crc.testing nova-public-openstack.apps-crc.testing placement-public-openstack.apps-crc.testing swift-public-openstack.apps-crc.testing api.crc.testing canary-openshift-ingress-canary.apps-crc.testing console-openshift-console.apps-crc.testing default-route-openshift-image-registry.apps-crc.testing downloads-openshift-console.apps-crc.testing oauth-openshift.apps-crc.testing
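On a Linux or macOS client, the entry can be generated and appended only once with a sketch like the following; hosts_entry and needs_entry are hypothetical helpers, the host names are copied from the line above, and 203.0.113.10 in the usage line is a placeholder for the PoC host IP.

```shell
#!/usr/bin/env bash
# Host names published by the PoC, copied from the /etc/hosts line above.
CRC_HOSTNAMES=(
  horizon-openstack.apps-crc.testing
  barbican-public-openstack.apps-crc.testing
  cinder-public-openstack.apps-crc.testing
  glance-default-public-openstack.apps-crc.testing
  keystone-public-openstack.apps-crc.testing
  neutron-public-openstack.apps-crc.testing
  nova-novncproxy-cell1-public-openstack.apps-crc.testing
  nova-public-openstack.apps-crc.testing
  placement-public-openstack.apps-crc.testing
  swift-public-openstack.apps-crc.testing
  api.crc.testing
  canary-openshift-ingress-canary.apps-crc.testing
  console-openshift-console.apps-crc.testing
  default-route-openshift-image-registry.apps-crc.testing
  downloads-openshift-console.apps-crc.testing
  oauth-openshift.apps-crc.testing
)

# hosts_entry IP: print one hosts line mapping IP to every name above.
hosts_entry() {
  echo "$1 ${CRC_HOSTNAMES[*]}"
}

# needs_entry FILE: succeed if FILE has no mapping for api.crc.testing yet.
needs_entry() {
  ! grep -qw 'api\.crc\.testing' "$1"
}
```

For example: needs_entry /etc/hosts && hosts_entry 203.0.113.10 | sudo tee -a /etc/hosts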

- Access the OKD web console with a browser at: https://console-openshift-console.apps-crc.testing/

- Access the OKD cluster with the usual oc commands:

$ oc login -u kubeadmin -p <kubeadmin password> https://api.crc.testing:6443

- Manage the OpenStack cloud using the openstack client:

$ openstack --os-username admin --os-password 12345678 --os-project-name admin --os-user-domain-name Default --os-project-domain-name Default --os-auth-url https://keystone-public-openstack.apps-crc.testing --insecure server list
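To avoid repeating the credentials on every command, they can be exported as the standard OS_* environment variables read by the openstack client. A sketch using the same values as the command above; poc_openstack is a hypothetical wrapper, and --insecure is kept on the command line because the endpoints use certificates the client does not trust.

```shell
#!/usr/bin/env bash
# Credentials copied from the command above; adjust them if your deployment differs.
export OS_AUTH_URL=https://keystone-public-openstack.apps-crc.testing
export OS_USERNAME=admin
export OS_PASSWORD=12345678
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default

# poc_openstack ARGS...: call the openstack client against the PoC, skipping TLS verification.
poc_openstack() {
  openstack --insecure "$@"
}
```

For example: poc_openstack server list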